This page introduces my work on the development of the Discussion Skills Assessment (DSA) instrument and its current status at the time of writing.

Historical Development and Rationale

The student-led seminar approach I have been pioneering in different educational contexts was first inspired by a student-led seminar activity that formed part of the University of Bristol’s 2016 pre-sessional English for Academic Purposes course. Since then, I have introduced the approach in a different and more integrated way on three other UK university courses, and I have been developing an assessment instrument to measure student performance in the seminar sessions. The original assessment instrument was very simple, with only four criteria. It has gradually evolved over four years in response to teacher and student feedback and the results of a focused multi-stage research project. The rationale for pursuing this study is threefold:

- There has been very positive feedback from students and an evident increase in student engagement.

- The results of statistical analysis of the instrument data indicate that it may be a robust assessment tool.

- This approach offers a means of accommodating AI as a learning tool without raising concerns about academic misconduct.

For further information on the development of the DSA, you can read the published articles below.

Academic Publications

For more details on the development of both the student-led approach and the DSA instrument, please check out these two articles:

The Current DSA Instrument

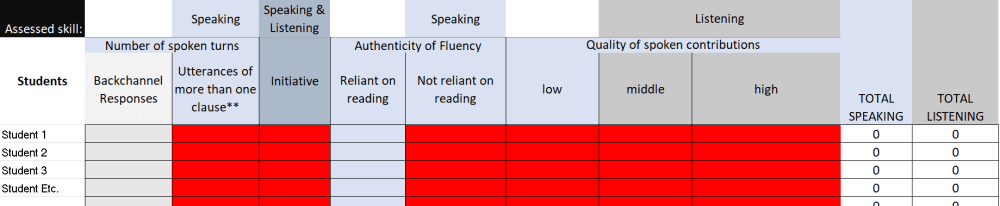

Clark & Terrett’s (2024) results, together with our subsequent research (still awaiting publication), have produced the following assessment criteria for the DSA:

| Assessed Skill | Criteria |
|---|---|
| Speaking | Utterances of more than one clause |
| Speaking | Authentic Fluency: Not reliant on reading |
| Speaking & Listening | Initiative |
| Listening | Quality of Contributions: Mid |
| Listening | Quality of Contributions: High |

The scoring is carried out on an Excel sheet while the students are speaking, and the scores are colour-coded in a traffic light system to aid student reflection and feedforward, as shown in the screenshot below.

I have made this available as a ready-to-use, downloadable Excel spreadsheet: DSA TEMPLATE

Scoring

Each time a student speaks for more than one clause, they are awarded a score for:

- ‘utterances of more than one clause’;

- ‘initiative’ if they volunteered to speak (the score for initiative is forfeited if they just wait until called on);

- ‘Not reliant on reading’ if the student wasn’t just reading from notes or PPT, etc. (‘Reliant on reading’ is not part of the score but is included on the DSA instrument because it has proved useful as part of the feedforward process);

- one of the levels of ‘Quality of Spoken Contributions’: ‘Low’ is for stating facts, repeating points, etc., and does not contribute to the total score, because it does not require any listening and could simply be a student reading from an AI-generated script; ‘Mid’ is awarded for describing, explaining and giving examples related to what other students have said; ‘High’ is awarded for comparing, challenging and evaluating.

- Backchannel responses are also recorded (though not currently scored) for short single-clause and one- or two-word utterances. These tend to be the leaders calling on others or students spontaneously expressing agreement or disagreement. I am collecting this information because backchannels are clearly strongly related to the progression of the discussion, and I want to determine whether they ought to be included in the final score.
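The tallying rules above can be sketched in a few lines of Python. This is a minimal sketch, not the DSA spreadsheet itself. It assumes each criterion ticked is worth one point. The page does not state any weights, so whether ‘High’ should count for more than ‘Mid’ is left open. The names `Quality`, `Utterance` and `StudentTally` are hypothetical. As described above, ‘Low’ contributions, reliance on reading and backchannel responses are recorded for feedforward but left out of the total.

```python
from dataclasses import dataclass
from enum import Enum


class Quality(Enum):
    LOW = "low"    # stating facts, repeating points: recorded, not scored
    MID = "mid"    # describing, explaining, giving examples related to others' points
    HIGH = "high"  # comparing, challenging, evaluating


@dataclass
class Utterance:
    multi_clause: bool         # False means it is logged as a backchannel response
    volunteered: bool = False  # True if the student spoke without being called on
    reading: bool = False      # True if the student was reading from notes or slides
    quality: Quality = Quality.LOW


@dataclass
class StudentTally:
    utterances: int = 0
    initiative: int = 0
    not_reading: int = 0
    mid: int = 0
    high: int = 0
    low: int = 0           # kept for feedforward only
    reading: int = 0       # kept for feedforward only
    backchannels: int = 0  # recorded, not (yet) part of the total

    def record(self, u: Utterance) -> None:
        """Update the tally for one utterance, following the rules listed above."""
        if not u.multi_clause:
            self.backchannels += 1
            return
        self.utterances += 1
        if u.volunteered:
            self.initiative += 1
        if u.reading:
            self.reading += 1
        else:
            self.not_reading += 1
        if u.quality is Quality.MID:
            self.mid += 1
        elif u.quality is Quality.HIGH:
            self.high += 1
        else:
            self.low += 1

    def total(self) -> int:
        # Assumption: one point per criterion ticked; Low, reading and backchannels excluded
        return self.utterances + self.initiative + self.not_reading + self.mid + self.high


tally = StudentTally()
tally.record(Utterance(multi_clause=True, volunteered=True, quality=Quality.HIGH))
tally.record(Utterance(multi_clause=True, reading=True, quality=Quality.LOW))
tally.record(Utterance(multi_clause=False))  # a short backchannel, e.g. "I agree"
print(tally.total())  # 5: four points for the first utterance, one for the second
```

Keeping ‘Low’ and ‘reading’ counts in the tally, even though they do not add to the total, mirrors the way the DSA keeps them visible for the feedforward process.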

Advice Regarding the Student-Led Seminar Approach

The student leaders of the session should be prepared to initiate the discussion with questions or other prompts while the teacher records the students’ utterances on the DSA. For a more detailed guide, see page 44 of the Terrett (2024) article listed above.

After every seminar, I ask the students to complete a very careful reflection (LINK TO TEMPLATE). In this way, students are empowered to make changes to the way we run the seminars and to the instrument itself. Indeed, some of the assessed criteria in the current version of the DSA instrument come from the students themselves.

The following is a list of recommendations and advice that have come from student feedback over three years of running these student-led seminar sessions:

- Speak one at a time and respect the speaker.

- Leaders should manage the discussion so that as many students as possible get the chance to speak. They shouldn’t take it as an opportunity to just present information themselves.

- Leaders will likely need to pick on shy or introverted students (i.e. call on quiet students to bring them into the discussion, even though those students then forfeit their ‘initiative’ score for that contribution).

- Images, data, polls and realia tend to prompt the most contributions and interesting discussions.

- Students should listen to each other and respond to the points raised by other students rather than just trotting out their pre-prepared answers. This will help students achieve better scores (mid and high quality of contributions) and advance the discussion.

- Students should be prepared for the seminar discussion, but Clark & Terrett (2024) found that scores for ‘evidence of preparation’ correlated with lower speaking scores, which is why ‘evidence of preparation’ is no longer part of the DSA. This somewhat counterintuitive finding can be explained by the fact that students who prepare detailed written answers lack the flexibility to adapt their ideas to the direction of the discussion. It is an important finding because it highlights the value of understanding the target concepts of the discussion rather than limiting oneself to a prepared speech. For the same reason, this approach can help students to use AI tools appropriately, because relying on an AI-generated script without understanding it will limit a student’s score on the DSA.

Samples of Student Feedback

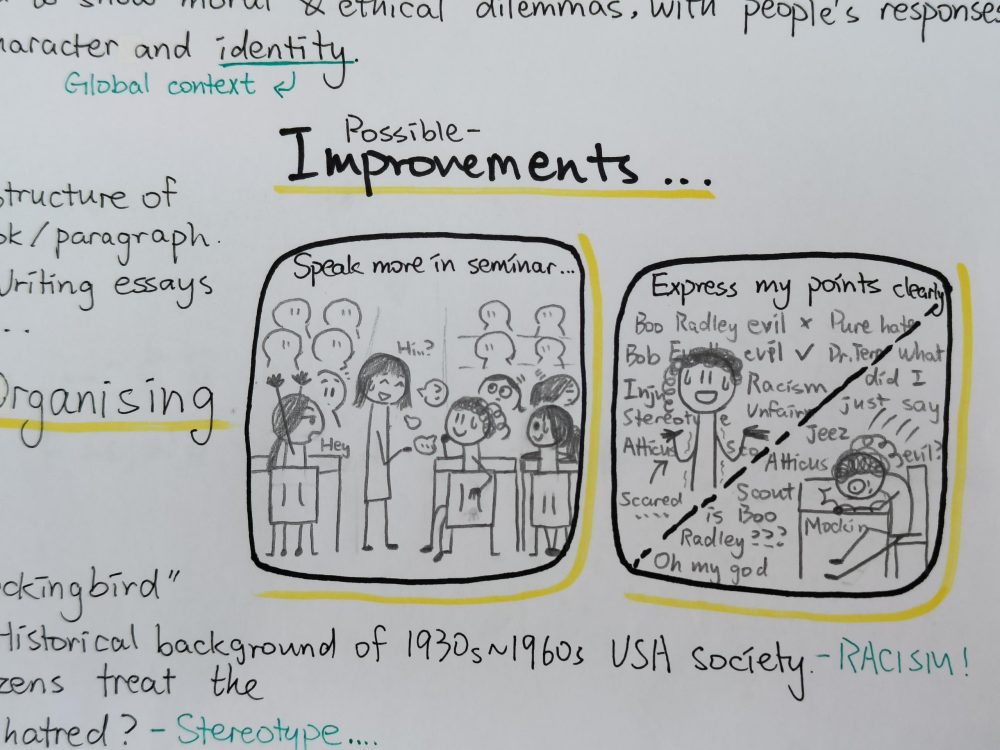

Although I have not completed any formal research specifically looking at this area, it has been evident to every teacher involved that this student-led seminar approach greatly increases student engagement. This impression is supported by a large number of student reflections and feedback comments. Here are some recent samples I received at the time of writing this blog post:

If you would like to see more student seminar reflections, you can check out my grade 8 students’ blog from the 2023-2024 academic year: https://mushroom-scholars.org/topics/seminar-reflection

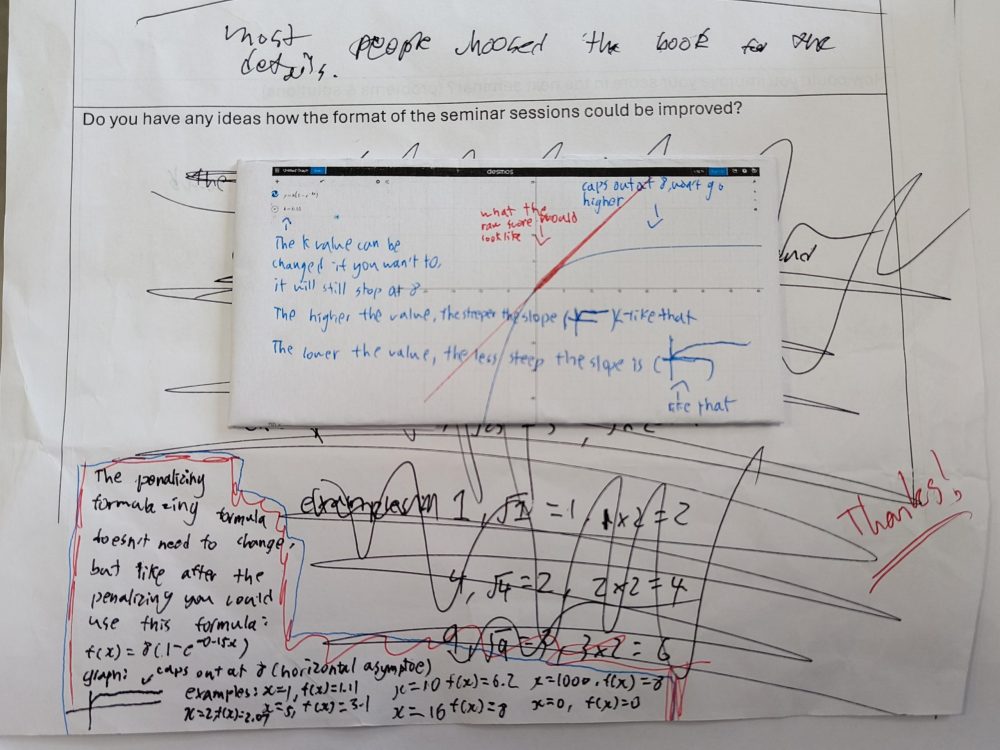

Collecting and responding to student feedback has been a very important part of the development of both the student-led seminar approach and the DSA instrument. The criteria ‘Initiative’ and ‘Authentic Fluency’ came partly from student concerns about the fairness of the scoring system. Towards the end of last year, students also expressed concern that the current system unfairly rewards students who dominate the discussion and lower its quality by making many ‘Low Quality of Spoken Contributions’. Although ‘Low’ contributions earn no quality score, each one can still earn points for utterance length, initiative and fluency. We started work on an algorithm that would calculate a penalty based on the number of a student’s ‘Low Quality of Spoken Contributions’ once their total contributions exceed a certain threshold. This work forms part of my current research, and students are continuing to help with it this academic year (2024-2025).
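To illustrate the idea, here is a hypothetical Python sketch of such a penalty. It is not the algorithm under development. The threshold, the points deducted per ‘Low’ contribution and the function names `low_quality_penalty` and `adjusted_score` are illustrative assumptions only. The penalty stays at zero until a student’s number of scoring contributions passes the threshold. After that, it grows linearly with the student’s ‘Low’ contributions.

```python
def low_quality_penalty(total_contributions: int, low_contributions: int,
                        threshold: int, points_per_low: float) -> float:
    """Hypothetical penalty: zero unless total contributions exceed `threshold`,
    then proportional to the number of Low Quality contributions."""
    if total_contributions <= threshold:
        return 0.0
    return points_per_low * low_contributions


def adjusted_score(raw_score: float, total_contributions: int, low_contributions: int,
                   threshold: int = 10, points_per_low: float = 0.5) -> float:
    # The default threshold and deduction rate are illustrative values, not research findings
    penalty = low_quality_penalty(total_contributions, low_contributions,
                                  threshold, points_per_low)
    return max(0.0, raw_score - penalty)  # never let the score drop below zero


print(adjusted_score(30, total_contributions=14, low_contributions=8))  # 26.0
print(adjusted_score(12, total_contributions=6, low_contributions=5))   # 12.0, under the threshold
```

A linear deduction is only one possible design. Another option would be to count only the ‘Low’ contributions made beyond the threshold, so that students who speak often are not penalised for their first few contributions.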

Current Research

I am satisfied that the DSA in its current format can successfully measure speaking and listening ability in a classroom discussion context with up to 25 students. However, classes of more than 15 students need more time allocated to ensure that everybody has the chance to speak a few times.

However, I am currently teaching IB MYP English Language and Literature (ELL), and speaking and listening scores are not directly transferable into IB ELL scores. The same issue applies to other disciplines. I am therefore trying to work out an algorithm that converts the ‘Low, Mid and High Quality of Spoken Contribution’ scores into an ‘evidence of participation and understanding’ score that could then be converted into IB MYP scores. If this proves successful, the DSA instrument need not be limited to assessment in the field of English as a foreign or second language and could be applied to other disciplines.

I have also been using the DSA instrument to record backchannel responses, which are defined as utterances that are too short to qualify as a scoring utterance. A few of my current students are interested in helping to research whether these can or should form part of the speaking or listening scores. Backchannel responses clearly help the discussion to progress, and so far they have shown a statistically significant relationship with almost every other criterion, so they seem worthy of further study.

I am also considering conducting a formal study on the student feedback, and possibly trialling the student-led seminar approach and DSA in Chinese classes.